Slack notifications with nmm

slack-alerts.Rmd

library(slurmtools)

#> Error in get(paste0(generic, ".", class), envir = get_method_env()) :

#> object 'type_sum.accel' not found

#>

#>

#> ── Needed slurmtools options ───────────────────────────────────────────────────

#> ✖ options('slurmtools.slurm_job_template_path') is not set.

#> ✖ options('slurmtools.submission_root') is not set.

#> ✖ options('slurmtools.bbi_config_path') is not set.

#> ℹ Please set all options for job submission defaults to work.

library(bbr)

library(here)

#> here() starts at /home/runner/work/slurmtools/slurmtools

nonmem = file.path(here::here(), "vignettes", "model", "nonmem")

options('slurmtools.submission_root' = file.path(nonmem, "submission-log"))

mod_number <- "1001"

if (file.exists(file.path(nonmem, paste0(mod_number, ".yaml")))) {

mod <- bbr::read_model(file.path(nonmem, mod_number))

} else {

mod <- bbr::new_model(file.path(nonmem, mod_number))

}

Cut me some Slack

nmm can also be paired with slack_notifier to send messages directly to you through a Slack bot. This requires the slack_notifier binaries: download the latest release, extract the snt binary and, as before, save it to ~/.local/bin.

Sys.which("snt")

snt

"/cluster-data/user-homes/matthews/.local/bin/snt"

snt requires the Slack bot OAuth token, which is found at https://api.slack.com/apps/<YOUR_APP_ID>/oauth?. The token can be saved in a file and passed to snt with the tokenFile flag.

Again, we need to update the 1001.toml file to get Slack notifications. The snt help message shows that it should be called as:

snt slack -c config_file -k decryption_key -a token -f tokenFile -e email -m message -t timestamp

The config_file can contain the key (if not using the default snt key) and the token file location, so you don’t need to supply that information on every call. I’ve saved the tokenFile location in a config file at ~/.local/bin/slack_notifier_settings.yaml and will use the -c flag to point to it.

With this our call to snt would look like:

snt slack -c ~/.local/bin/slack_notifier_settings.yaml -e matthews@a2-ai.com -m "Hello"
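The same call can be assembled from R, which makes it easier to check the arguments before anything is sent. The following is only a sketch: `build_snt_args()` is a hypothetical helper (not part of slurmtools or snt), and it uses only the flags shown in the help text above.

```r
# Hypothetical helper: build the argument vector for `snt slack`.
# Values are shell-quoted because system2() passes them through a shell.
build_snt_args <- function(config, email, message, timestamp = NULL) {
  args <- c(
    "slack",
    "-c", shQuote(config),
    "-e", shQuote(email),
    "-m", shQuote(message)
  )
  # -t is only added when replying to an existing message
  if (!is.null(timestamp)) {
    args <- c(args, "-t", shQuote(timestamp))
  }
  args
}

snt_args <- build_snt_args(
  config = path.expand("~/.local/bin/slack_notifier_settings.yaml"),
  email = "matthews@a2-ai.com",
  message = "Hello"
)

# Only call the binary if it is actually installed on this machine.
snt_path <- Sys.which("snt")
if (nzchar(snt_path)) {
  snt_out <- system2(snt_path, snt_args, stdout = TRUE, stderr = TRUE)
}
```

Keeping the argument construction separate from the `system2()` call means the vector can be inspected or unit-tested without sending a message.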

The timestamp flag is needed to reply to an existing message, which we want to do to avoid spamming alerts. However, since we can’t possibly know the timestamp of a message we have yet to send, we have no way of specifying this argument up front. This is what the use_stdout argument for the nmm alerter is for: it captures the standard output of the call to the alerter binary and parses it for additional flags to use on subsequent calls. With all this in mind, we can update our nmm config to achieve this Slack messaging.
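To make the use_stdout idea concrete, here is a rough R sketch of parsing captured standard output for flags to reuse. It is not nmm's actual implementation: `parse_stdout_flags()` is a hypothetical helper, and the output format (`--flag value` pairs) and the timestamp value are assumptions for illustration.

```r
# Hypothetical helper: extract "--flag value" (or "--flag=value") pairs
# from the captured standard output of an alerter call.
parse_stdout_flags <- function(stdout_lines) {
  text <- paste(stdout_lines, collapse = " ")
  matches <- regmatches(text, gregexpr("--[A-Za-z_]+[ =][^ ]+", text))[[1]]
  flags <- list()
  for (m in matches) {
    name <- sub("^--([A-Za-z_]+)[ =].*$", "\\1", m)
    value <- sub("^--[A-Za-z_]+[ =]", "", m)
    flags[[name]] <- value
  }
  flags
}

# Made-up example of what the first call might print (assumed format).
first_call_stdout <- "--timestamp 1712345678.000200"
extra <- parse_stdout_flags(first_call_stdout)

# The parsed value can then be appended to the next call so that the
# new message is posted as a reply to the first one.
reply_args <- c("-t", extra$timestamp)
```

The first message is sent without a timestamp. Every later message reuses the value parsed from that first call's output, so all alerts for a run end up in one thread.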

slurmtools::generate_nmm_config(

mod,

watched_dir = "/cluster-data/user-homes/matthews/Packages/slurmtools/vignettes/model/nonmem",

output_dir = "/cluster-data/user-homes/matthews/Packages/slurmtools/vignettes/model/nonmem/in_progress",

overwrite = TRUE,

files_to_track = c("ext"),

bbi_config_path = "/cluster-data/user-homes/matthews/Packages/slurmtools/vignettes/model/nonmem/bbi.yaml",

alerter_opts = list(

alerter = normalizePath('~/.local/bin/snt'), # binary location of snt

command = "slack",

message_flag = "m", #This is the default so we don't need to specify it

use_stdout = TRUE, # captures the output of snt, which will include a --timestamp flag and its value

args = list(email = "matthews@a2-ai.com", config = "/cluster-data/user-homes/matthews/.local/bin/slack_notifier_settings.yaml")

)

)

#> Warning in normalizePath("~/.local/bin/snt"):

#> path[1]="/home/runner/.local/bin/snt": No such file or directory

This generates the following toml file:

model_number = '1001'

watched_dir = '/cluster-data/user-homes/matthews/Packages/slurmtools/vignettes/model/nonmem'

output_dir = '/cluster-data/user-homes/matthews/Packages/slurmtools/vignettes/model/nonmem/in_progress'

[alerter]

alerter = '/cluster-data/user-homes/matthews/.local/bin/snt'

command = 'slack'

message_flag = 'm'

use_stdout = true

[alerter.args]

email = 'matthews@a2-ai.com'

config = '/cluster-data/user-homes/matthews/.local/bin/slack_notifier_settings.yaml'With alert = 'Slack' and email set in the

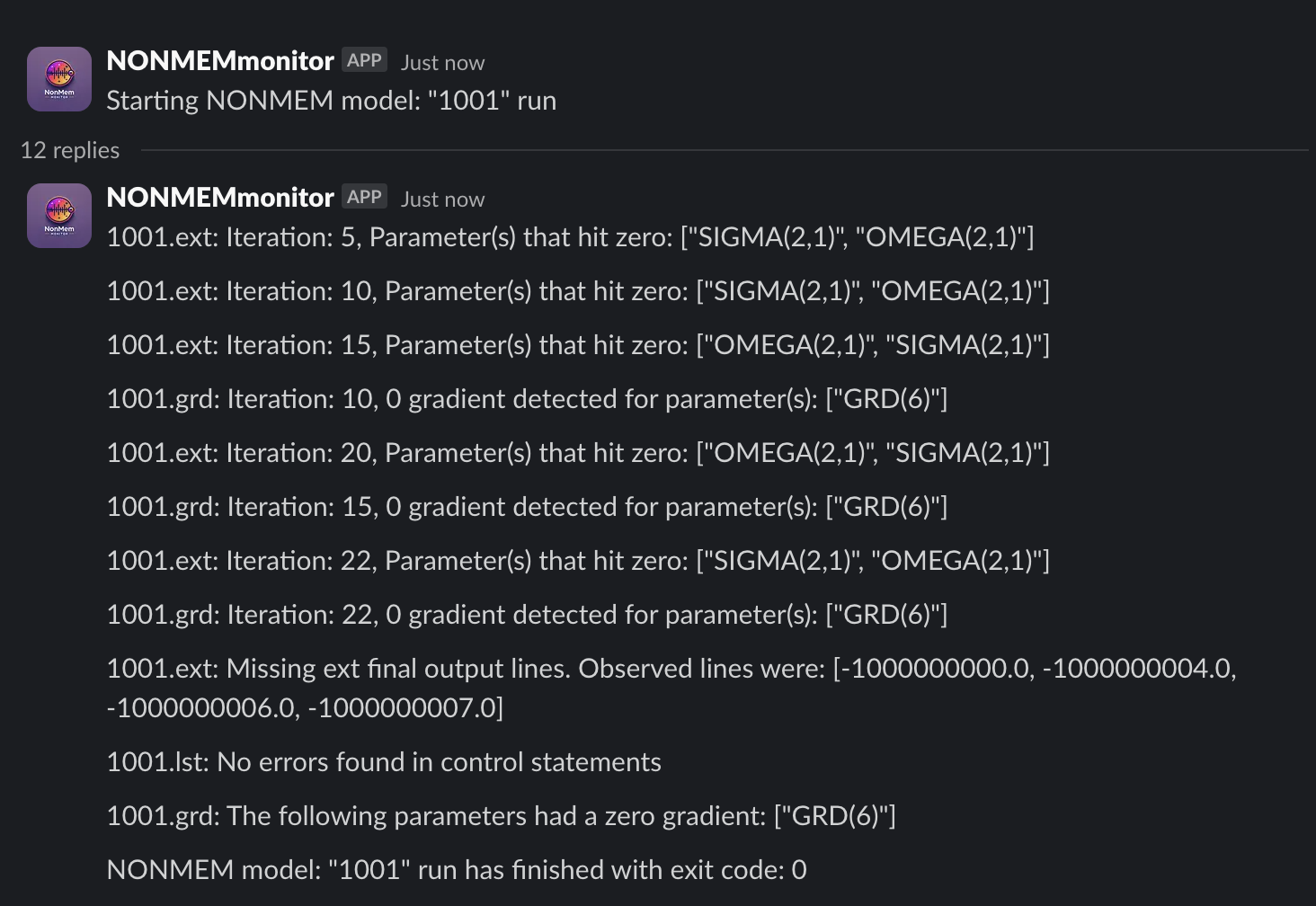

1001.toml file nmm will send slack

notifications directly to you when a NONMEM run starts and it will reply

to that message with notifications if any gradients hit 0 and when the

run finishes it checks if all -1E9 lines are present in the .ext file

and gives another message about any parameters that hit 0 gradient.
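For readers unfamiliar with the .ext file, the end-of-run check can be pictured with a small R sketch. This is not how nmm itself is implemented: `has_ext_rows()` is a hypothetical helper, the .ext excerpt is made up, and the set of iteration codes to look for is an assumption (in NONMEM output, -1000000000 marks the final estimates and -1000000001 their standard errors).

```r
# A tiny, made-up .ext excerpt used only for illustration.
ext_text <- c(
  "TABLE NO.     1: First Order Conditional Estimation with Interaction",
  " ITERATION    THETA1       THETA2       OBJ",
  "            0  1.00000E+00  2.00000E+00  100.5",
  "           10  1.10000E+00  2.10000E+00   95.2",
  "  -1000000000  1.10000E+00  2.10000E+00   95.2",
  "  -1000000001  1.00000E-01  2.00000E-01    0.0"
)

# Hypothetical helper: report which of the expected summary rows are present.
# The default codes are an assumption, not nmm's actual list.
has_ext_rows <- function(ext_lines, codes = c(-1000000000, -1000000001)) {
  # Skip the "TABLE NO." line; the next line holds the column names.
  ext <- read.table(text = ext_lines, skip = 1, header = TRUE)
  codes %in% ext$ITERATION
}

complete_run <- has_ext_rows(ext_text)         # both summary rows present
partial_run <- has_ext_rows(ext_text[1:4])     # run cut off before the summary rows
```

A run whose .ext file lacks these rows most likely did not finish estimation normally, which is why the check is worth a final notification.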

submission_nmm <- slurmtools::submit_slurm_job(

mod,

overwrite = TRUE,

slurm_job_template_path = file.path(nonmem, "slurm-job-nmm.tmpl"),

slurm_template_opts = list(

)

)

submission_nmm

#> $status

#> [1] 0

#>

#> $stdout

#> [1] "Submitted batch job 804\n"

#>

#> $stderr

#> [1] ""

#>

#> $timeout

#> [1] FALSE

slurmtools::get_slurm_jobs(user = 'matthews')

#> # A tibble: 1 × 13

#> job_id partition job_name user_name job_state time cpus standard_input

#> <int> <chr> <chr> <chr> <chr> <time> <int> <chr>

#> 1 1159 cpu2mem4gb 1001-nonmem… matthews COMPLETED 00'13" 1 /dev/null

#> # ℹ 5 more variables: standard_output <chr>, submit_time <dttm>,

#> # start_time <dttm>, end_time <dttm>, current_working_directory <chr>